When it comes to the visibility of a website in search engines, many people first think of keywords, backlinks or content strategies. What is often overlooked is how important the correct control of search engine crawlers is. This is exactly where robots.txt comes into play: a small text file with a big impact.

It decides which areas of your website are crawled by Google and other search engines and which are not, and can therefore have a direct influence on your WordPress page speed, your rankings and the efficiency of crawling. In this article, I will show you why robots.txt is so important, how it is structured and what you should pay attention to in order to exploit the full potential of your website.

What is robots.txt?

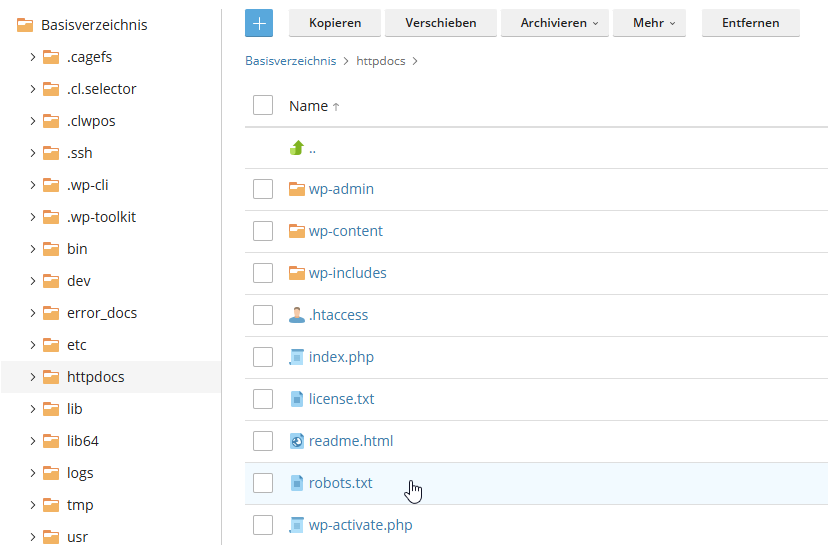

The robots.txt is a small text file that resides in the root directory of your website (for a WordPress installation, usually the httpdocs folder). You can access the root directory either directly via your WordPress hosting or via FTP. The robots.txt tells search engine crawlers (such as Googlebot), social media crawlers (such as those of TikTok or Facebook) and AI crawlers (such as those of ChatGPT) which pages or areas of your website they may or may not crawl.

Where is robots.txt used?

The robots.txt is used wherever search engines or other crawlers are to be controlled, so on practically every website. To be more precise:

- On your own website

It lies in the main directory of the website, e.g.:

https://www.deine-website.de/robots.txt

As soon as a crawler visits your site, it first looks here to see if there are any special rules.

- Typical areas of application

  - WordPress websites: Areas such as /wp-admin/, /wp-includes/ or plugin folders can be blocked so that they do not appear in search engines.

  - E-commerce stores: Shopping cart pages, internal filter pages or test pages are often excluded.

  - Company websites: Admin areas or private subpages that are not intended for the public.

  - Development or test servers: Sites that are still under construction can be completely blocked (Disallow: /).

How is robots.txt structured?

The robots.txt works according to a clear, standardized structure: It consists of instructions that tell the search engine crawlers which areas of a website they may and may not search.

Basically, a robots.txt consists of blocks, each of which applies to one or more crawlers. Each block begins with the line User-agent, followed by the name of the crawler to which the rules apply.

The asterisk * is used to address all crawlers simultaneously. This is followed by the actual instructions, usually in the form of Disallow or Allow. Disallow specifies a path that the crawler should not crawl, while Allow explicitly releases paths, even if the parent folder is blocked.

This is the standard robots.txt in WordPress:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Legend:

User-agent → for which crawler the rule applies (* means all crawlers)

Disallow → Paths that should not be crawled

Allow → Paths that are explicitly allowed (useful if a subfolder within a restricted area should be allowed)
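To make the interplay of Disallow and Allow more tangible, here is a minimal Python sketch. It assumes the matching logic described in RFC 9309 and followed by Google: the longest matching rule wins, and Allow wins a tie. The function name is_allowed and the rule list are my own illustration and not part of WordPress, and other crawlers may evaluate rules differently.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Decide whether `path` may be crawled under simple prefix rules.

    `rules` is a list of ("allow" | "disallow", path_prefix) pairs.
    The longest matching prefix wins; on a tie, "allow" wins.
    A path that matches no rule is allowed.
    """
    best_len = -1
    allowed = True
    for kind, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty prefix matches nothing in this sketch
        is_allow = kind == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


# The rules of the standard WordPress robots.txt
WORDPRESS_DEFAULT = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]

print(is_allowed("/wp-admin/admin-ajax.php", WORDPRESS_DEFAULT))
print(is_allowed("/wp-admin/options.php", WORDPRESS_DEFAULT))
```

The Allow line is longer and therefore more specific than the Disallow line, which is why admin-ajax.php stays reachable while the rest of /wp-admin/ is blocked.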

With every WordPress installation, a standard robots.txt file is created. The entry Disallow: /wp-admin/ keeps search engine crawlers out of your admin dashboard. This means you don't have to create your own file, but can use the automatically and dynamically generated variant.

Important note for WordPress users: This file is created dynamically and is not stored as a fixed file on your server. This means that you cannot simply access and edit the standard file via FTP or via your WordPress hosting interface.

Important to know:

- No safety measure: robots.txt only prevents crawling, not access to the pages. Anyone who knows the URL can still access it.

- Search engines can ignore rules: Some crawlers do not follow the rules.

- Not for complete indexing control: To completely remove content from search engines, you should also use noindex meta tags. Keep in mind that a crawler can only see a noindex tag on pages it is allowed to crawl.

Examples of a robots.txt

You have now seen the standard version. You have many options with robots.txt, so I would like to show you five examples that you can use to optimize your robots.txt for SEO, security and performance.

Example 1: Protect admin area

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

This corresponds to the standard robots.txt from WordPress. These lines keep crawlers out of the WordPress admin area of your website, so that files from the backend are not crawled. The exception for admin-ajax.php allows crawlers to reach this important endpoint, e.g. for certain plugin or sitemap calls, while the rest of the admin area remains blocked.

Example 2: Blocking directories for media

User-agent: *

Disallow: /wp-content/uploads/private/

In this example, the upload directory "private", in which confidential images or data are saved, is blocked for all crawlers and search engines. All other uploaded files will continue to be crawled as usual.

Example 3: Exclude development or staging areas

# Block 1: Googlebot

User-agent: Googlebot

Disallow: /staging/

Disallow: /dev/

# Block 2: All other bots

User-agent: *

Allow: /

💡 Note: The "#" serves exclusively as a comment character. Anything after a # is ignored by crawlers and not executed. This lets you structure files such as robots.txt or .htaccess more clearly.

This example shows a robots.txt file with two blocks. The first block prohibits the Googlebot from crawling development or staging areas. The second block allows all other bots to crawl the website.
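If you want to see how such blocks are assigned to crawlers, you can replay the example with Python's standard library module urllib.robotparser. This is only a rough sketch: the domain example.com and the crawler name ExampleBot are placeholders I made up, and Python's parser is simpler than the one Google uses.

```python
from urllib.robotparser import RobotFileParser

# Same rules as in Example 3, without blank lines inside a block
ROBOTS_TXT = """\
# Block 1: Googlebot
User-agent: Googlebot
Disallow: /staging/
Disallow: /dev/

# Block 2: All other bots
User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot is bound by block 1, every other crawler by block 2
googlebot_staging = parser.can_fetch("Googlebot", "https://example.com/staging/index.html")
googlebot_blog = parser.can_fetch("Googlebot", "https://example.com/blog/")
other_staging = parser.can_fetch("ExampleBot", "https://example.com/staging/index.html")

print(googlebot_staging, googlebot_blog, other_staging)
```

The sketch also makes one consequence visible: because only the Googlebot block contains the Disallow lines, all other crawlers may still crawl /staging/ and /dev/.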

Example 4: Hide categories and tags

User-agent: *

Disallow: /category/

Disallow: /tag/

With this example, you prohibit search engines from crawling category and tag archives that are automatically created by WordPress and are often not actively used. These pages often generate duplicate content because the same posts can be accessed via the start page, categories and tags as well as via the actual post URL.

Example 5: Blocking certain file types

User-agent: *

Disallow: /*.pdf$

Disallow: /*.zip$

This example blocks PDF and ZIP files from being crawled. This can be useful if you offer download materials or internal documents that should not appear in the search results.
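How the two special characters in these paths behave can be sketched in a few lines of Python. The sketch assumes the common interpretation (as in RFC 9309 and at Google) that * stands for any sequence of characters and a trailing $ marks the end of the URL. The helper names pattern_to_regex and matches are my own and do not come from any library.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regular expression.

    `*` matches any sequence of characters, a trailing `$` anchors the
    end of the URL. Everything else is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal parts and join them with ".*" for each "*"
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """Return True if `path` is covered by the robots.txt `pattern`."""
    # re.match anchors at the start of the path, like a robots.txt rule
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/*.pdf$", "/downloads/guide.pdf"))
print(matches("/*.pdf$", "/downloads/guide.pdf?download=1"))
```

Because of the $, a URL with a query string after .pdf is no longer covered by the rule. Without the $, the rule would also match such URLs.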

How do I call up the robots.txt file?

The robots.txt can be called up very easily because it is available by default in the main directory of your website. All you have to do is enter the URL of your domain and append /robots.txt at the end.

Example: https://deine-website.de/robots.txt

If the file exists, it will be displayed in the browser as simple text. There you can see all the rules for search engine crawlers, such as User-agent, Disallow or Allow. The dynamically generated WordPress robots.txt is also displayed in this way.
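If you would rather retrieve the file with a script than in the browser, a minimal Python sketch could look like this. The function names robots_url and fetch_robots are my own, and the download naturally only works with network access and an existing domain.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves `page_url`."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace path, query and fragment
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(page_url: str, timeout: float = 10.0) -> str:
    """Download the robots.txt and return it as text (needs network access)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("https://deine-website.de/blog/my-post/?utm=1"))
```

Calling fetch_robots("https://deine-website.de/") would then return the same text that the browser displays.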

If you want to edit or create your robots.txt, this is usually done directly on the web server in the root directory of the website, via your content management system or via a WordPress plugin. Editing with the help of a WordPress plugin is the easiest way and is also suitable for anyone without a technical background. After the adaptation, the edited file can be accessed immediately via the URL and search engines can recognize the new instructions the next time they crawl.

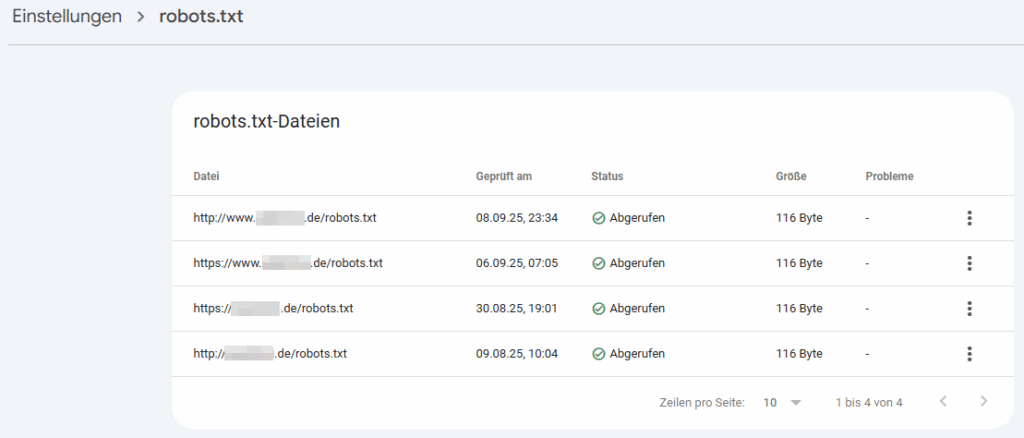

Worth knowing: robots.txt tester

A robots.txt Tester is a tool that you can use to check whether your file is set up correctly and whether the rules actually work the way you want them to. For example, you can use it to check whether certain pages or directories are blocked or allowed for crawlers.

You can test your robots.txt with the following tools, for example:

- Google Search Console

- Bing Webmaster Tools

- Rank Math robots.txt tool

- SE Ranking robots.txt Tool

There are numerous free tools for testing robots.txt. The ones listed are the simplest options based on my experience.
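For a quick local check before uploading, a small tester can also be sketched with Python's standard library. The helper check_urls and the sample URLs are my own assumptions. Python's built-in parser is simpler than Google's and may treat wildcards and the order of Allow and Disallow differently, so I would only use it as a rough check alongside the tools above.

```python
from urllib.robotparser import RobotFileParser


def check_urls(robots_txt: str, user_agent: str, urls: list[str]) -> dict[str, bool]:
    """Return for each URL whether `user_agent` may crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


RULES = """\
User-agent: *
Disallow: /wp-content/uploads/private/
"""

report = check_urls(RULES, "Googlebot", [
    "https://deine-website.de/wp-content/uploads/private/contract.png",
    "https://deine-website.de/wp-content/uploads/2025/logo.png",
])

for url, allowed in report.items():
    print("allowed" if allowed else "blocked", url)
```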

How do I edit the robots.txt?

If you have not yet created your own robots.txt for your WordPress website and are using the dynamically generated robots.txt, you cannot yet access and edit this file.

In this case, I recommend one of the following two ways:

- Manual creation: Create a robots.txt manually with a free tool like Notepad++ or Sublime. Upload this file to the root directory of your website.

- SEO plugins: With free SEO plugins such as Rank Math or Yoast, you can edit your robots.txt conveniently from your backend.

Create and edit robots.txt manually

1. Create robots.txt file

- Open a program like Sublime or Notepad++

- Insert the content of your desired robots.txt there

- Save the file as a TXT file with the name robots.txt

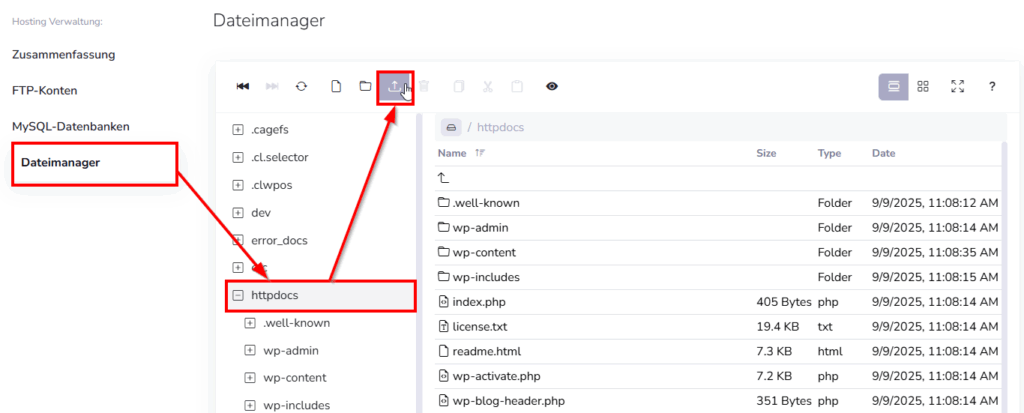

2. Access the root directory via hosting

Note: These instructions apply to the WPspace interface; the steps vary from hoster to hoster.

- Log in to your customer dashboard

- Click on Manage tariff to access your WordPress administration

- Select "My hosting" in the top tab

- Select "File manager" under the hosting administration and navigate to your root directory, usually "httpdocs"

- Click on Upload and upload the created robots.txt

Alternatively, you can also use an FTP account and an FTP tool such as FileZilla to access the main directory of your website and upload your created file.

Tip 💡: If you are using WordPress hosting from WPspace, you can contact the support team at any time for assistance with editing your robots.txt. You can also find instructions on how to do this in our Help Center.

Edit robots.txt with plugins

The easiest way to edit your robots.txt is with the help of an SEO plugin. Editing the robots.txt is already a free function of various plugins. This allows you to quickly and easily edit the robots.txt directly in your WordPress dashboard without having to open and edit the file manually.

I recommend the following WordPress plugins:

1. Rank Math:

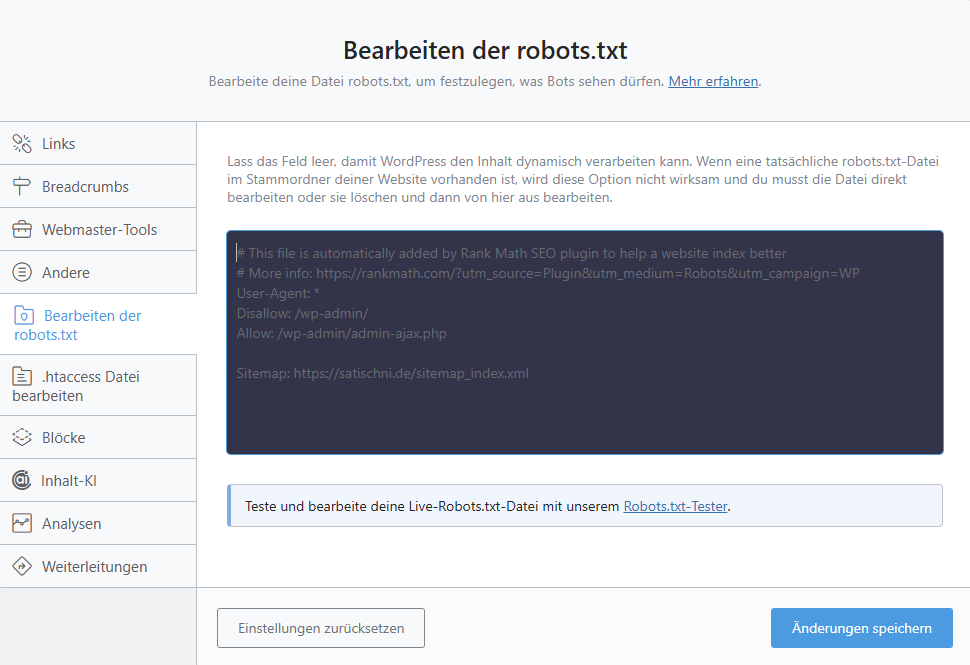

Rank Math is one of the most popular SEO plugins and is also our recommendation for SEO WordPress plugins. To edit your robots.txt with Rank Math, first log in to your WordPress dashboard. Then select "Rank Math SEO" > "General settings" > "Edit robots.txt". You can now insert your new code here.

2. Yoast SEO:

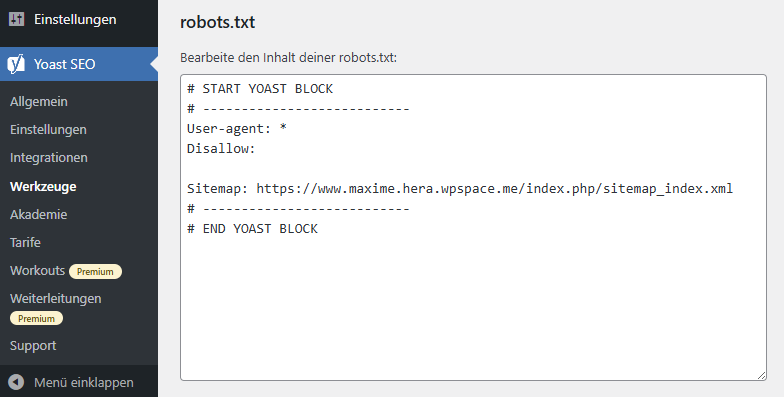

With 10+ million active installations (as of 08.09.2025), Yoast SEO is the most widely used SEO plugin in the world. Here, too, you can edit your robots.txt natively. To do this, navigate to "Yoast SEO" > "Tools" > "File Editor". You can then create a robots.txt file with one click and edit it.

3. All in One SEO:

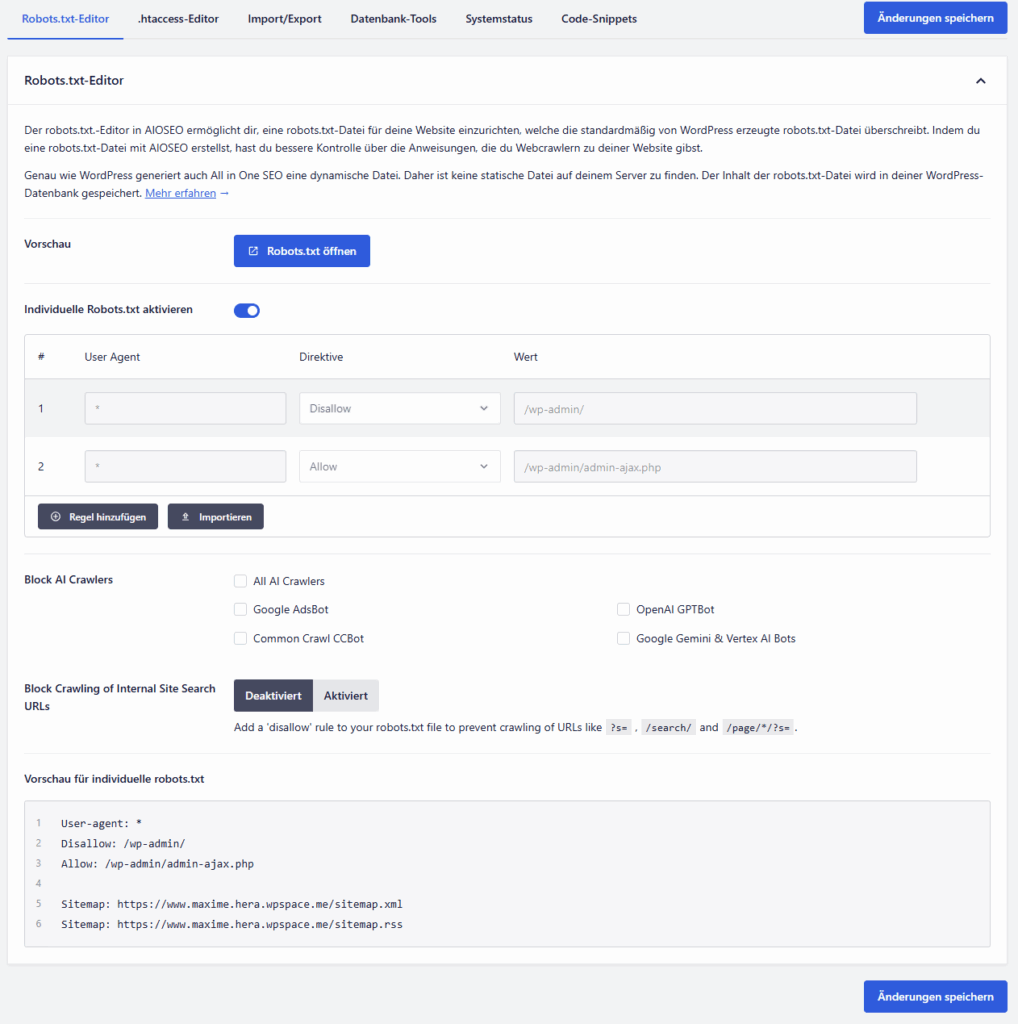

The SEO plugin "All in One SEO" offers probably the clearest interface for editing your robots.txt. To edit the file, navigate to "All in One SEO" > "Tools" > "robots.txt Editor" in your WordPress dashboard. You can then activate the individual robots.txt and add your desired rules.

If you are already using one of the plugins, I recommend that you do not install an additional plugin, because every additional plugin can slow WordPress down and create additional security risks.

Overall, editing your robots.txt with the help of a plugin is much easier, faster and less error-prone than manual editing.

Why is robots.txt important for SEO?

The robots.txt is a small but very important tool for SEO because it helps search engine crawlers to crawl a website efficiently and index the relevant pages.

For example, it can be used to block admin areas, login pages or internal filter pages that are irrelevant for users and search engines. This prevents unnecessary or duplicate content from diluting the ranking.

In addition, robots.txt supports the optimization of the so-called crawl budget, i.e. the limited number of pages that search engines can search in a certain period of time. By excluding unimportant pages, crawlers can concentrate on the really relevant content and index it faster.

Areas such as internal test pages can also be kept out of crawling with robots.txt, which supports the SEO performance of the website, although it is no substitute for real access protection. Without a correctly configured robots.txt, it can easily happen that important content is not crawled or unwanted content appears in the search results. Used correctly, robots.txt therefore significantly supports the visibility and efficiency of a website in search engines.

robots.txt is only one part of SEO

The robots.txt is only a small part of the overall search engine optimization. If you want to build up long-term visibility, you need a holistic SEO strategy, which takes into account technical basics as well as content and backlinks.

Companies that cannot or do not want to handle their SEO tasks internally often fall back on white label SEO. This makes it easy to outsource professional optimizations, while robots.txt forms an important basis for the technical control of crawling.

What happens if I don't have robots.txt?

If you have a WordPress website, you automatically have a standard robots.txt. However, this default file only regulates access to your admin area.

If you do not have robots.txt or have not made any manual adjustments, search engine crawlers can basically crawl all pages of your website. This means that there are no specific instructions as to which areas they should ignore and all content is potentially indexed.

In most cases, this is not a direct problem, especially for small or simple websites. However, problems can arise with larger websites or those with a lot of sensitive, unimportant or duplicate content:

Crawlers waste their crawl budget on unimportant pages, and unwanted content could appear in the search results. Duplicate content problems can also arise if similar content is available under multiple URLs.

In addition, crawlers can generate a lot of traffic on a website and thus server load, which can significantly affect the performance of your website. The TikTok crawler in particular is known to crawl websites very aggressively. Depending on the size and technical basis of the website, this can even lead to an outage.

In short: Without robots.txt, there is no targeted control of indexing. The search engines crawl the website freely, which can negatively affect the performance of your website, reduce the efficiency of crawling and impair SEO performance.

Alternatives to robots.txt

robots.txt is practical, but not always the best choice for keeping content away from search engines. Depending on the use case, there are better alternatives:

- noindex meta tag: Prevents the indexing of individual pages directly in the HTML code.

- nofollow meta tag: Ensures that search engines do not follow links on a page.

- HTTP header: A server-side solution that allows you to define indexing rules for entire file types.

- Password protection or .htaccess: Protects sensitive areas (e.g. staging environments) reliably against unauthorized access.
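Whether a page really delivers a noindex or nofollow instruction can be checked with a short Python sketch. It reads the robots meta tag from the HTML code and, if you pass in the response headers, the X-Robots-Tag header as well. The names RobotsMetaParser and robots_directives are my own, and the sketch deliberately ignores special cases such as header values that are aimed at a single crawler.

```python
from html.parser import HTMLParser
from typing import Optional


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str, headers: Optional[dict[str, str]] = None) -> set[str]:
    """Return all robots directives found in the HTML and the headers."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.update(p.strip().lower() for p in value.split(",") if p.strip())
    return directives


PAGE = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(sorted(robots_directives(PAGE)))
```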

robots.txt - a valuable tool

The robots.txt may seem inconspicuous, but it is a central tool for clean and efficient crawling of your WordPress website, which is part of search engine optimization. It allows you to control crawler access in a targeted manner, exclude unimportant or duplicate content from crawling and thus make optimum use of the crawl budget.

By restricting the crawlers, you minimize the risk of performance losses or even failures that can occur due to overly aggressive crawling. You should always monitor your logs and visitor statistics for anomalies.
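One simple way to spot such anomalies is to count the requests per user agent in your access log. The following Python sketch assumes that your server writes the widely used combined log format, in which the user agent is the last quoted field. The sample lines and the crawler name ExampleBot are invented for illustration.

```python
import re
from collections import Counter

# In the combined log format a line ends with: "referer" "user-agent"
USER_AGENT_RE = re.compile(r'"[^"]*" "([^"]*)"\s*$')


def requests_per_user_agent(log_lines) -> Counter:
    """Count how many requests each user agent made."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


SAMPLE_LOG = [
    '203.0.113.5 - - [08/Sep/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [08/Sep/2025:10:00:01 +0000] "GET /tag/a/ HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '198.51.100.7 - - [08/Sep/2025:10:00:02 +0000] "GET /blog/ HTTP/1.1" 200 2048 "https://example.com/" "Mozilla/5.0"',
]

counts = requests_per_user_agent(SAMPLE_LOG)
for agent, hits in counts.most_common():
    print(hits, agent)
```

If a single crawler stands out at the top of this list, that is a good starting point for a targeted rule in your robots.txt.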

At the same time, robots.txt protects sensitive areas of your website from appearing in search results. Although it does not replace security measures, when used correctly it makes a significant contribution to ensuring that search engines quickly identify and index the really relevant content on your website. If you use your robots.txt consciously, you create the basis for better visibility, faster performance and a sustainable SEO foundation.